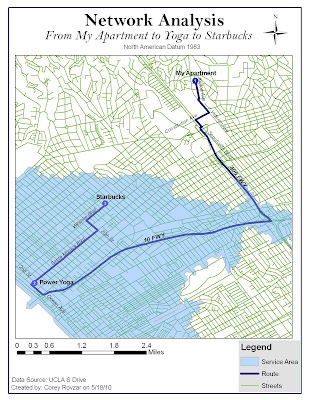

This lab addressed two questions: (1) What is the best path to take to my yoga studio and then to Starbucks? (2) Does the studio I go to serve the area where I live? To answer the first, I analyzed my Thursday morning route, which starts at my apartment, stops at the yoga studio, and ends at a Starbucks on Wilshire. To answer the second, I generated the service area for the yoga studio to evaluate whether the studio serves the area where I live.

The parameters for the optimal route were kept at their default values: the impedance was time (minutes), U-turns were allowed everywhere, and the output shape type was true shape. The speed values used for the time parameter were 10, 15, 20, 25, 30, 35, 50, and 65 miles per hour, and the search tolerance was set to 5000 meters. Setting the impedance to time generated the fastest route based on speed limits.

The service area was likewise created using default parameters: the impedance was time with a default break of 5, the direction was away from the facility, U-turns were allowed everywhere, the one-way restriction was applied, and the parameter values and search tolerance were the same as those used for the optimal route. Distance units for both analyses were set to miles. With time as the impedance and a default break of 5, the resulting service area was restricted to locations within 5 minutes of the studio.
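To make the role of the time impedance and the 5-minute break concrete, the following is a minimal Python sketch, not the solver used in the lab. It assumes a tiny, made-up street network (the node names, segment lengths, and speeds are hypothetical), converts each segment's length and speed into minutes, and uses Dijkstra's algorithm to find the fastest travel times from the studio. The service area is then every node reachable within the break value. One-way restrictions and U-turn rules are ignored here for simplicity.

```python
import heapq


def travel_minutes(length_miles, speed_mph):
    """Time impedance for one segment: distance / speed, in minutes."""
    return length_miles / speed_mph * 60.0


def build_graph(edges):
    """Build an undirected adjacency list weighted by travel time."""
    graph = {}
    for a, b, miles, mph in edges:
        minutes = travel_minutes(miles, mph)
        graph.setdefault(a, []).append((b, minutes))
        graph.setdefault(b, []).append((a, minutes))
    return graph


def fastest_times(graph, source):
    """Dijkstra's algorithm: least travel time from source to every node."""
    best = {source: 0.0}
    queue = [(0.0, source)]
    while queue:
        cost, node = heapq.heappop(queue)
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbor, minutes in graph.get(node, []):
            new_cost = cost + minutes
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(queue, (new_cost, neighbor))
    return best


def service_area(graph, facility, break_minutes):
    """Nodes whose fastest travel time from the facility is within the break."""
    times = fastest_times(graph, facility)
    return {node for node, t in times.items() if t <= break_minutes}


# Hypothetical network: (from, to, length in miles, speed in mph).
EDGES = [
    ("studio", "A", 1.0, 25),
    ("A", "B", 1.0, 25),
    ("B", "apartment", 5.0, 35),
    ("studio", "ramp", 0.5, 30),
    ("ramp", "apartment", 6.5, 65),
]

if __name__ == "__main__":
    graph = build_graph(EDGES)
    print(fastest_times(graph, "studio"))
    print(service_area(graph, "studio", 5))
```

In this toy network the freeway link gives the apartment its fastest connection to the studio, yet the apartment still falls outside the 5-minute service area, which mirrors the kind of result described in this report.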

According to the optimal route, the quickest way from my apartment to the yoga studio is to take Kelton to Veteran, Constitution, Sepulveda, the 405, the 10, Ocean, Santa Monica, and finally 2nd, ending at 1410 2nd St. From the studio to Starbucks, the quickest way is to take 2nd to Santa Monica, 20th, and finally Wilshire, ending at 2525 Wilshire Blvd. This route should be fastest because of the higher speed limits on the freeways, but in practice it depends on traffic. Depending on when I leave, side streets may be faster because of heavier congestion on the freeways. The network analysis could be improved by accounting for traffic in addition to local speed limits.

The service area for the yoga studio included everything less than 5 minutes away. Based on these results, my apartment is more than 5 minutes from the studio. It could therefore be argued that it is not practical for me to drive all the way to Santa Monica for yoga when there are closer studios that serve my area. However, the network analysis accounts only for travel costs, not for other factors that may make it worthwhile to travel farther. The studio I go to is donation-based, meaning I can pay whatever I can afford for a class without being locked into a plan. Because of this, it is worth it for me to pay the transportation costs of time and gas money to go to Santa Monica rather than pay more for classes closer to my apartment.

The results generated by the network analysis would be realistic for suburban areas and small cities that do not experience heavy traffic. For Los Angeles and other major cities, however, the analysis would be reliable only during hours with minimal traffic. Adding a traffic component would make the network analysis more accurate for highly congested areas such as Los Angeles.
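The effect of traffic on the fastest route can be illustrated with a short Python sketch. This is only an assumption about how a traffic component might work, not a feature of the analysis used in the lab: each road class gets a hypothetical congestion multiplier that scales its free-flow travel time. The two routes, their segment lengths, and the rush-hour multipliers below are invented for illustration.

```python
def route_minutes(segments, congestion=None):
    """Total travel time in minutes for a route.

    segments: list of (road_class, length in miles, speed limit in mph).
    congestion: optional dict mapping road_class to a time multiplier
                (1.0 means free-flow; 2.0 means the segment takes twice as long).
    """
    congestion = congestion or {}
    total = 0.0
    for road_class, miles, mph in segments:
        factor = congestion.get(road_class, 1.0)
        total += miles / mph * 60.0 * factor
    return total


# Hypothetical routes between the same two points.
FREEWAY_ROUTE = [("local", 1.0, 25), ("freeway", 6.5, 65), ("local", 0.5, 25)]
SIDE_STREET_ROUTE = [("local", 6.0, 30)]

# Hypothetical rush-hour multipliers: freeways slow down far more than local streets.
RUSH_HOUR = {"freeway": 2.5, "local": 1.2}

if __name__ == "__main__":
    for label, traffic in [("free-flow", None), ("rush hour", RUSH_HOUR)]:
        freeway = route_minutes(FREEWAY_ROUTE, traffic)
        side = route_minutes(SIDE_STREET_ROUTE, traffic)
        winner = "freeway" if freeway < side else "side streets"
        print(f"{label}: freeway {freeway:.1f} min, side streets {side:.1f} min -> {winner}")
```

With speed limits alone the freeway route is faster, but under the assumed rush-hour multipliers the side-street route wins, which is the situation a speed-limit-only analysis cannot detect.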